Training data and prediction requests can both contain sensitive information about people or businesses, and that information has to be protected. How do you safeguard the privacy of individuals? What steps ensure that individuals keep control of their data? Many countries have regulations that enforce privacy and security.

In Europe there is the GDPR (General Data Protection Regulation) and in California there is the CCPA (California Consumer Privacy Act). Fundamentally, both give individuals control over their data and require companies to protect the data used in a model. When data processing is based on consent, an individual has the right to revoke that consent at any time.

Defending ML Models against attacks – Ensuring privacy of consumer data:

I briefly discussed the tools for adversarial training, the CleverHans and Foolbox Python libraries, here: Model Debugging: Sensitivity Analysis, Adversarial Training, Residual Analysis. Let us now look at more stringent means of protecting an ML model against attacks, which in turn protects the privacy and security of the data. An ML model may be attacked in different ways; some literature classifies the attacks into “Information Harms” and “Behavioural Harms”.

An Information Harm occurs when information leaks from the model. There are different forms of Information Harm: Membership Inference, Model Inversion and Model Extraction. In Membership Inference, the attacker can determine whether a given record was part of the training data. In Model Inversion, the attacker can reconstruct training data from the model, and in Model Extraction, the attacker is able to extract the entire model!
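To make Membership Inference concrete, here is a minimal sketch of a confidence-threshold attack. Everything in it is an assumption for illustration: the "model" is a hypothetical stand-in that has memorised its training points and reports higher confidence the closer a query is to one of them, and the names `fit_memorising_model` and `looks_like_member` are made up. Real attacks target real models, but the idea is the same: an overfit model is more confident on records it has seen.

```python
import math
import random


def fit_memorising_model(train_points):
    """Return a toy 'model' that has memorised its training points (hypothetical stand-in)."""
    def predict_confidence(x):
        # Confidence is 1.0 on a memorised point and decays with distance from the nearest one.
        nearest = min(math.dist(x, p) for p in train_points)
        return math.exp(-nearest)
    return predict_confidence


def looks_like_member(predict_confidence, x, threshold=0.99):
    """Attacker's guess: very high confidence suggests x was in the training data."""
    return predict_confidence(x) >= threshold


rng = random.Random(7)
members = [(rng.random(), rng.random()) for _ in range(50)]    # used for training
outsiders = [(rng.random(), rng.random()) for _ in range(50)]  # never seen by the model

model = fit_memorising_model(members)
hits = sum(looks_like_member(model, x) for x in members)
false_alarms = sum(looks_like_member(model, x) for x in outsiders)
print(f"members flagged: {hits}/50, outsiders flagged: {false_alarms}/50")
```

The attacker only calls the model's prediction function, never the training set itself, which is why this kind of leak matters for any model exposed through a prediction API.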

Behavioural Harm occurs when the attacker can change the behaviour of the ML model itself, for example by inserting malicious data. I have given an example involving an autonomous vehicle in this article: Model Debugging: Sensitivity Analysis, Adversarial Training, Residual Analysis

Cryptography | Differential privacy to protect data

You should consider privacy-enhancing technologies such as Secure Multi-Party Computation (SMPC) and Fully Homomorphic Encryption (FHE). In SMPC, multiple systems work together to train or serve the model while the actual data is kept secure.
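One building block often used in SMPC is additive secret sharing. The sketch below is only an illustration under stated assumptions: the modulus `PRIME`, the three-party setup and the function names are all chosen for this example, and a real SMPC framework would add networking, multiplication protocols and protection against malicious parties. It shows how several parties can compute a sum without any of them seeing an individual value.

```python
import secrets

# Public modulus for all share arithmetic (an illustrative choice: the Mersenne prime 2^61 - 1).
PRIME = 2**61 - 1


def share(secret, n_parties):
    """Split an integer into n additive shares that sum to it modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    last = (secret - sum(shares)) % PRIME
    return shares + [last]


def reconstruct(shares):
    """Recombine shares; any incomplete subset looks like random numbers."""
    return sum(shares) % PRIME


def secure_sum(private_values, n_parties=3):
    # Each data owner splits its value and sends one share to each party.
    all_shares = [share(v, n_parties) for v in private_values]
    # Each party adds up the shares it received, without learning any input.
    partial_sums = [sum(column) % PRIME for column in zip(*all_shares)]
    # Only the combination of all partial sums reveals the total.
    return reconstruct(partial_sums)


salaries = [52_000, 61_500, 48_250]
print(secure_sum(salaries))  # 161750
```

The same pattern extends to sums of gradients or feature statistics, which is how it ends up being useful when training or serving a model across several systems.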

In FHE the data is encrypted. Prediction requests contain encrypted data, and the model is also trained on encrypted data. The data is never decrypted except by the user, so every computation has to be carried out on ciphertexts, which results in heavy computational cost. Users send encrypted prediction requests and receive back an encrypted result. The goal is to use cryptography to protect the consumer's data.
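A full FHE scheme is too large to show here, so the sketch below swaps in the Paillier cryptosystem, which is only additively homomorphic (it supports adding ciphertexts and multiplying them by plaintext integers, not arbitrary computation). It is a toy under loud assumptions: the primes are far too small to be secure, the function names are invented for this example, and the weights must be non-negative integers. It needs Python 3.8+ for `pow(x, -1, n)`. What it does show is the request/response flow described above: the server computes a linear score on encrypted features and never sees the plaintext.

```python
import math
import secrets


def keygen(p=104_729, q=1_299_709):
    """Toy key generation from two small primes (insecure, illustration only)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)                               # modular inverse of lam
    return n, (lam, mu)                                # public key, private key


def encrypt(n, m):
    """Encrypt integer m (0 <= m < n) with fresh randomness, using g = n + 1."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return ((1 + m * n) * pow(r, n, n2)) % n2


def decrypt(n, private_key, c):
    lam, mu = private_key
    return ((pow(c, lam, n * n) - 1) // n * mu) % n


def encrypted_linear_score(n, enc_features, weights):
    """Server side: compute Enc(sum(w_i * x_i)) from ciphertexts only."""
    n2 = n * n
    acc = 1  # a valid ciphertext of 0
    for c, w in zip(enc_features, weights):
        acc = (acc * pow(c, w, n2)) % n2  # multiplying ciphertexts adds plaintexts
    return acc


n, private_key = keygen()
request = [encrypt(n, x) for x in [3, 5, 2]]               # user encrypts the features
response = encrypted_linear_score(n, request, [4, 1, 7])   # server works on ciphertexts
print(decrypt(n, private_key, response))                   # 31, decrypted by the user only
```

Even in this toy, each encrypted multiplication is a modular exponentiation on numbers twice the key size, which hints at why computing on encrypted data is so much more expensive than computing on plaintext.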

Differential Privacy in Machine Learning

Differential privacy protects the data by adding noise to it, so that attackers cannot identify the real content. SmartNoise is an open-source project that contains components for building machine learning solutions with differential privacy. SmartNoise is made up of the following top-level components:

✔️SmartNoise Core Library

✔️SmartNoise SDK Library

This is a good read for understanding Differential Privacy: https://docs.microsoft.com/en-us/azure/machine-learning/concept-differential-privacy

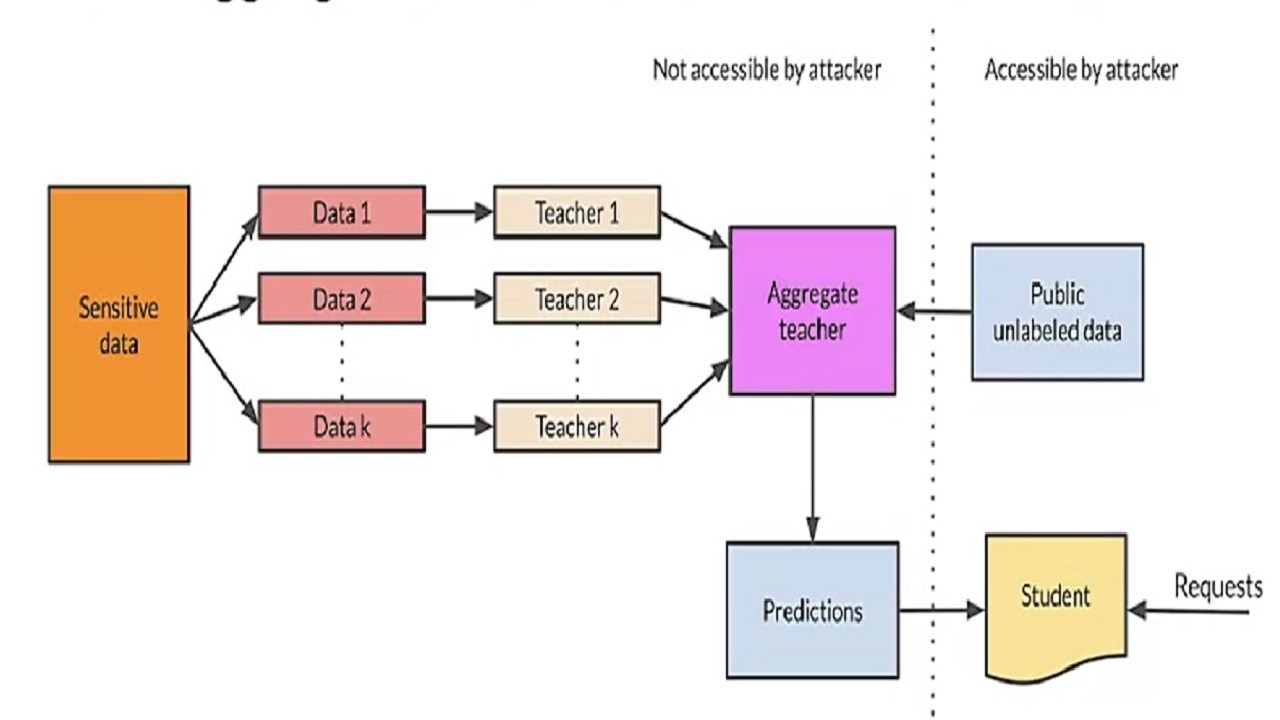
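The noise-adding idea behind differential privacy can be sketched with the Laplace mechanism applied to a counting query. This is a minimal illustration, not the SmartNoise API: the function names, the sample `ages` list and the choice of `epsilon` are all assumptions made for this example, and here the noise is added to a query result rather than to each record. A smaller `epsilon` means more noise and stronger privacy.

```python
import random


def laplace_noise(scale, rng):
    # The difference of two independent exponential samples follows a Laplace distribution.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng):
    """Noisy count of matching records; a counting query has sensitivity 1."""
    true_count = sum(1 for r in records if predicate(r))
    # Noise scale = sensitivity / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(0)
ages = [23, 35, 41, 29, 52, 38, 61, 47, 33, 58]  # made-up data
noisy = private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)
print(f"noisy count of people aged 40+: {noisy:.2f}")
```

Because adding or removing one person changes the true count by at most 1, noise of this scale hides whether any single individual is in the data, while the answer stays useful on average.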

Private Aggregation of Teacher Ensembles (PATE)

This follows the Knowledge Distillation concept that I discussed here: Post 1 - Knowledge Distillation, Post 2 - Knowledge Distillation. PATE begins by dividing the sensitive data into “k” partitions with no overlaps. It then trains k teacher models, one per partition, and combines their predictions into an aggregate teacher. During the aggregation, you add noise to the teachers' output (their vote counts), so that the aggregate result does not depend too strongly on any single partition.

To train the student model, you take unlabelled public data and feed it to the aggregate teacher; the result is labelled data on which the student model is trained. For deployment, you use only the student model.

The process is illustrated in the figure below:

PATE (Private Aggregation of Teacher Ensembles)
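The PATE steps described above can be sketched end to end on a toy problem. This is a simplified illustration under assumptions, not a reference implementation: the data is synthetic one-dimensional data, the "models" are hypothetical threshold classifiers (`train_threshold`), and the noise scale of 1.0 is an arbitrary choice. The teachers vote on each public point, Laplace noise is added to the vote counts, and the student is trained only on the resulting labels.

```python
import random
from collections import Counter


def laplace_noise(scale, rng):
    # The difference of two independent exponential samples follows a Laplace distribution.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def train_threshold(examples):
    """Toy learner: midpoint between the class means (assumes both classes are present)."""
    zeros = [x for x, y in examples if y == 0]
    ones = [x for x, y in examples if y == 1]
    return (sum(zeros) / len(zeros) + sum(ones) / len(ones)) / 2


def noisy_teacher_label(thresholds, x, noise_scale, rng):
    """Aggregate teacher: add noise to each class's vote count, then pick the winner."""
    votes = Counter(int(x > t) for t in thresholds)
    noisy = {label: votes.get(label, 0) + laplace_noise(noise_scale, rng) for label in (0, 1)}
    return max(noisy, key=noisy.get)


rng = random.Random(42)
k = 10

# Sensitive data (synthetic): the label is 1 when the feature exceeds 0.5.
private = [(x, int(x > 0.5)) for x in (rng.random() for _ in range(300))]
partitions = [private[i::k] for i in range(k)]             # k disjoint partitions
teachers = [train_threshold(part) for part in partitions]  # one teacher per partition

# Unlabelled public data is labelled by the noisy aggregate teacher.
public = [rng.random() for _ in range(200)]
labelled = [(x, noisy_teacher_label(teachers, x, 1.0, rng)) for x in public]

# The student never touches the private data; only this model is deployed.
student = train_threshold(labelled)
print(f"student threshold: {student:.3f}")
```

When the teachers agree strongly, the noise rarely changes the winning label; it mostly affects points where the vote is close, which are also the points where a single partition could otherwise tip the result.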

Credits:

- MLOps Specialization [Course 4 | Course 2] at deeplearning.ai

- https://docs.microsoft.com/en-us/azure/machine-learning/concept-differential-privacy

- https://www.linkedin.com/pulse/model-debugging-sensitivity-analysis-adversarial-training-ajay-taneja/